As AI continues to shape our daily lives, one question that keeps coming up among parents, educators, and tech users alike is whether AI chatbots are safe for children. With their rising popularity across platforms, it’s understandable why many are now reevaluating the effects of these digital companions on younger audiences. I’ve seen kids interact with chatbots in educational apps, video games, and even smart speakers at home. But not all chatbots are built with safety in mind—especially as they become more advanced in their responses and emotional simulations.

Chatbots today can mimic conversation with impressive realism. Some are designed for entertainment, others for productivity, and some are intended for adult interaction. The boundaries between them are sometimes unclear, which raises serious questions when minors start interacting with bots not designed for their age group.

Understanding What Kids Encounter in AI Chats

We often assume that kids are chatting with AI bots in apps focused on learning or storytelling. While that may be true in many cases, not all platforms have clear safeguards. Some chatbots are built with open-ended dialogue systems, meaning they can respond in unpredictable ways depending on user input. I’ve come across bots that allowed users to manipulate their responses by repeatedly guiding the conversation toward inappropriate topics.

In particular, there’s growing concern over unfiltered or semi-filtered AI systems that mimic adult conversations. Kids can unintentionally or deliberately end up interacting with bots that offer features such as AI sex chat, which are clearly inappropriate for their age. These features usually exist on platforms meant strictly for adults, but without proper age verification, curious kids can still gain access. This situation highlights the urgent need for more effective content filters and parental controls.

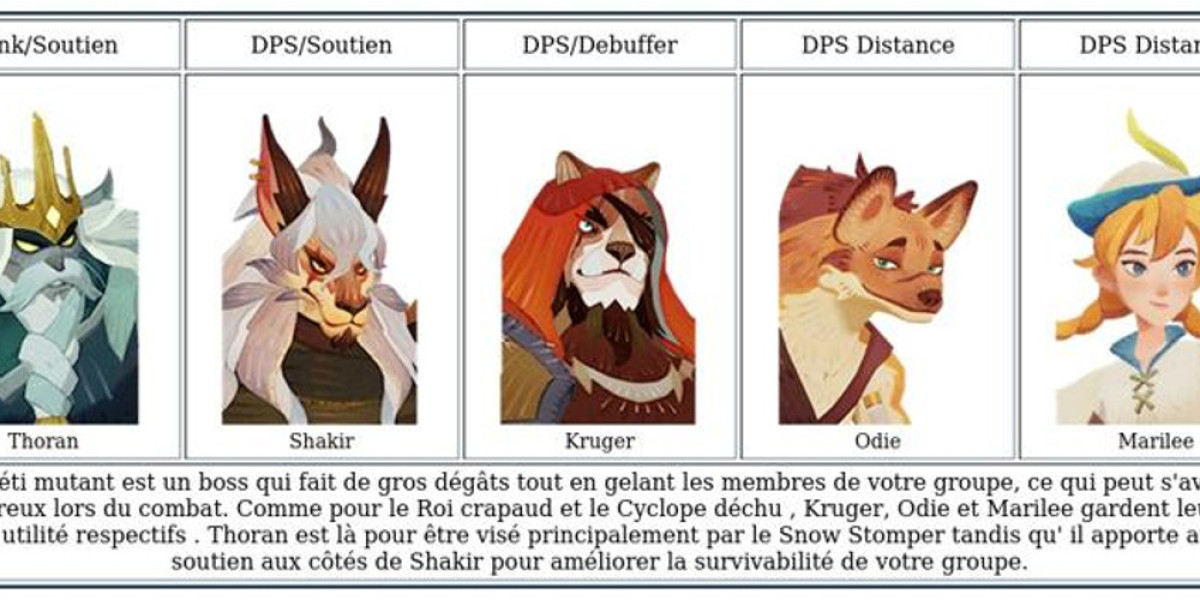

The Role of Animation and Character Style in Attracting Young Users

One trend I’ve noticed is that anime AI chat is particularly appealing to younger users. The colorful designs, expressive avatars, and light-hearted tone make these bots seem safe and fun. However, not all anime-themed chatbots are made for children. Some anime AI chat platforms contain mature content hidden behind cute visuals, and kids may not recognize the difference between entertainment made for them and content intended for adults.

Compared with educational bots, these character-driven AI chats are often more engaging because they mimic social interactions. They use humor, emotion, and even romantic tones to hold users’ attention. That makes it difficult for kids, and even some adults, to distinguish safe interaction from content that crosses boundaries. As a result, we need to evaluate how presentation style affects perception and behavior, especially for younger audiences.

What Parents and Educators Need to Look Out For

It’s not just about whether a chatbot is labeled as “safe for kids.” We need to ask what kind of AI powers the conversation and what sort of data is being collected. Many chatbots are trained on massive datasets from the internet, which means their responses can sometimes reflect inappropriate or biased information.

Likewise, many of these bots collect user inputs to improve their interactions. Using that data as part of an AI marketing strategy might make sense in a business context, but it becomes questionable when the data comes from minors. Parents rarely know how much of their child’s information is being stored or used to refine future conversations.

In the same way that we monitor what videos or games our kids consume, we need to treat chatbot use with the same level of care. That includes checking privacy settings, using apps with proper moderation systems, and discussing online behavior with children in an open, judgment-free way.

Why Kids Are Drawn to AI Chatbots in the First Place

Children enjoy novelty, especially when it’s interactive. AI chatbots give them someone to talk to anytime—no waiting, no judgment, and no consequences for saying the “wrong” thing. Some kids even use these bots to process emotions, practice conversations, or cope with loneliness. I’ve seen examples where a child would return to the same chatbot every day, treating it almost like a friend.

That can be helpful in some cases, but only if the bot is designed with care. Some chatbots are trained to mirror emotional responses, which can blur the line between what’s real and what’s simulated. If a child becomes emotionally attached to an AI that isn’t safe or reliable, it could affect their social development or sense of boundaries.

The Danger of Content Slipping Through Filters

Even though many chatbot platforms claim to be safe, content can sometimes slip through. AI responses are not fully predictable, particularly when users ask unusual or emotionally charged questions. The problem is most serious when a bot tries to simulate empathy or intimacy but fails to respond appropriately.

For instance, some AI systems meant for general use may steer the conversation into more adult contexts if they’re not properly supervised. There have been cases where seemingly innocent bots started using flirtatious tones or suggestive replies after persistent prompting from users. Although these instances may be rare, the fact that they can happen means we need to be extra cautious about which bots kids can access.

How Companies Use AI Marketing to Keep Users Hooked

Another angle that often gets overlooked is how AI chatbots use design techniques to maximize engagement. Many platforms apply AI marketing principles to retain users—both kids and adults—by personalizing interactions and making conversations feel increasingly rewarding. That might include daily rewards, emotional check-ins, or giving users the ability to “train” their bot to remember personal preferences.

For children, these features can be especially addictive. When a bot starts to “remember” them or respond in a specific way, it creates a feedback loop that’s hard to break. In some cases, this emotional investment may interfere with how they interact with real people, especially if the chatbot becomes their primary social outlet.

What Safety Measures Can Actually Work

Despite all these concerns, there are practical steps parents and guardians can take. Choosing chatbots from verified educational apps with child-safe certifications is a good starting point. These typically include strict filters, limited language sets, and no access to open-ended chat features.

Additionally, kids should use AI platforms in shared spaces rather than alone in private. Talking openly with them about what’s okay and what’s not in digital conversations builds awareness. Meanwhile, developers should continue investing in smarter filters, identity verification systems, and warning prompts for inappropriate content.

Still, no system is perfect. That’s why it’s important that we, as adults, stay involved and pay attention. A chatbot might seem like harmless fun at first, but without proper oversight, it can introduce risks that kids aren’t equipped to handle on their own.

The Line Between Supportive and Risky

Some parents argue that chatbots help children feel less lonely, especially when they struggle to make friends in real life. There’s truth in that. A well-designed AI chatbot can offer emotional support, encourage creativity, and even help with speech development. But that benefit only holds up if the content remains appropriate, the data is secure, and children are taught to distinguish between human and AI interaction.

With AI sex chat growing in popularity on adult platforms, we must be especially cautious. Kids can unintentionally access or mimic adult-style communication if they aren’t properly supervised. Even though these bots are not intended for minors, the lack of robust barriers in some systems makes this a real concern.

Conclusion

AI chatbots are not inherently harmful to kids, but their safety depends heavily on how they’re designed, used, and monitored. As adults, we need to stay proactive—whether that means setting parental controls, choosing the right platforms, or having regular conversations with children about digital behavior. While these bots can support learning and emotional development, they also carry potential risks that can’t be ignored. By staying informed and involved, we can help ensure that AI becomes a safe, helpful presence in children’s lives rather than a hidden threat.